Prompt management best practices

Here are our recommended best practices for managing your prompts. This guide will help you establish a robust workflow for developing, testing, and deploying prompts using LangSmith.

The prompt development lifecycle: iterating in the playground

We recommend treating prompt development as an iterative, experimental process. The LangSmith Playground is the ideal environment for this initial "development" phase.

Using the Playground, you and your team can:

- Rapidly iterate on prompts: Modify prompt templates and see how the changes affect the output immediately.

- Compare different LLMs: Test the same prompt against various models (e.g., GPT-4o vs. Claude 3 Opus vs. Llama 3) side-by-side to find the best one for the job. This is crucial, as a prompt's effectiveness can vary significantly between models.

- Test with diverse inputs: Run the prompt and model configuration against a range of different inputs to check for edge cases and ensure reliability.

- Optimize the prompt: Use the Prompt Canvas feature to have an LLM improve your prompt. ➡️ See the blog post: LangChain Changelog

- Develop and test tool calling: Configure tools and functions that the LLM can call, and test the full interaction within the Playground.

- Refine your app: Run experiments directly against your dataset in the Playground to see changes in real time as you iterate on prompts. Share experiments with teammates to get feedback and collaboratively optimize performance.

Once you are satisfied with a prompt and its configuration in the Playground, you can save it as a new commit in your prompt's history. The Playground UI is well suited to experimentation, but for more automated workflows you can also create and update prompts programmatically with the LangSmith SDK.

➡️ See the docs: Manage prompts programmatically

➡️ See the SDK reference: client.create_prompt

Manage prompts through different environments

Prompts are not static text; they are a fundamental component of your LLM application's logic, just like source code. A minor change can significantly alter an LLM's response or tool selection, making structured lifecycle management essential. The LangSmith Prompt Hub provides a central workspace to manage this complexity. This guide details the complete workflow for using the Hub to test prompts in development, validate them in staging, and deploy them confidently to production.

Update application prompts based on prompt tags

LangSmith provides a collaborative interface to iterate on prompts and share them with your team. After some initial testing in the Playground, you'll want to see how the prompt behaves within the context of your application. In LangSmith's Prompt Hub, you can apply commit tags to prompt versions, so your application can pick up a new version of a prompt without requiring a code change each time.

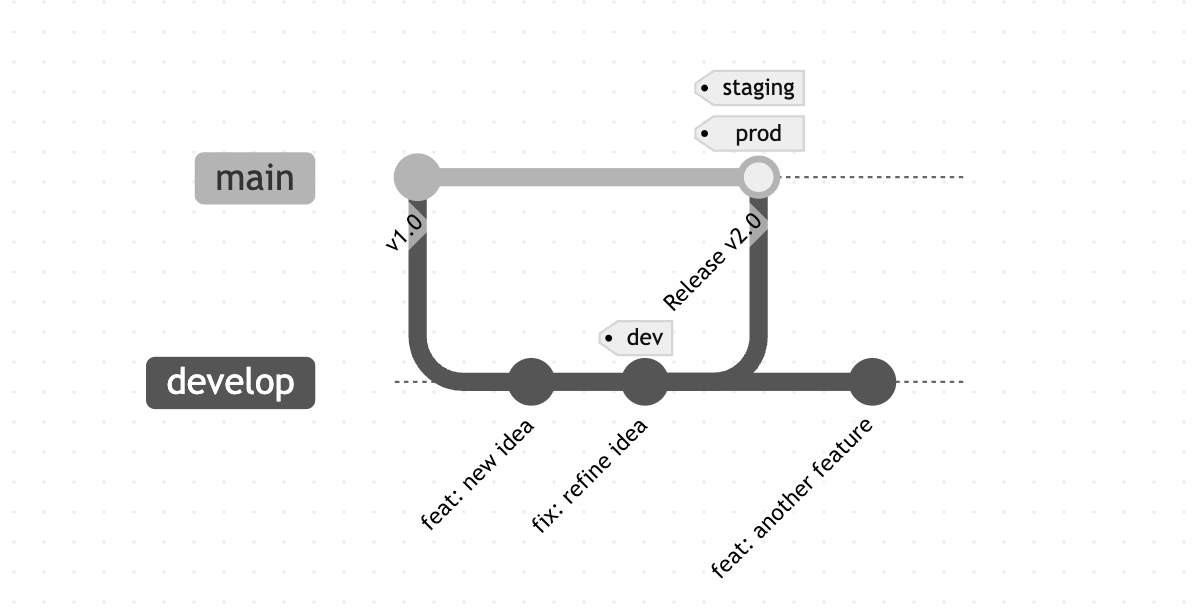

You can assign a meaningful name (e.g., dev, staging, prod) to a specific version (commit) of your prompt. This allows you to create a dynamic reference to the prompt version you want to use in a particular environment.

For instance, you can have a dev tag pointing to the latest, most experimental version of your prompt, a staging tag for a more stable version undergoing final testing, and a prod tag for the version you trust to be in your live application. As you promote a prompt from development to production, you move the tag from one commit to another within the LangSmith UI.

To implement this workflow, you reference the tag in your application code instead of a static commit hash. This enables you to update the prompt in your application without a new code deployment.

How to pull a prompt via commit tag in your environments

LangSmith's Prompt Tags feature is designed for exactly this workflow. Instead of hardcoding a specific prompt version in your application, you reference the tag.

For example, your development environment could pull the prompt tagged dev, while your production application pulls the one tagged prod.

```python
from langsmith import Client

client = Client()  # reads your API key from the LANGSMITH_API_KEY environment variable

# In your development environment, fetch the latest experimental prompt
prompt_dev = client.pull_prompt("your-prompt-name:dev")

# In your staging environment, fetch the release candidate
prompt_staging = client.pull_prompt("your-prompt-name:staging")

# In production, this code always fetches the stable prompt version currently tagged as "prod"
prompt_prod = client.pull_prompt("your-prompt-name:prod")
```
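To avoid hardcoding a different identifier in each deployment, one option is to derive the tag from the environment the code is running in. The sketch below assumes a hypothetical `APP_ENV` environment variable and a hypothetical `prompt_reference` helper; only the resulting `"name:tag"` string is passed to `client.pull_prompt`.

```python
import os
from typing import Optional

# Hypothetical mapping from deployment environment to prompt commit tag.
ENV_TO_TAG = {"development": "dev", "staging": "staging", "production": "prod"}


def prompt_reference(prompt_name: str, env: Optional[str] = None) -> str:
    """Return a "name:tag" identifier for the given (or current) environment."""
    # APP_ENV is an assumed variable name; default to development so a
    # misconfigured local run never silently uses the prod prompt.
    env = env or os.environ.get("APP_ENV", "development")
    if env not in ENV_TO_TAG:
        raise ValueError(f"unknown environment {env!r}; expected one of {sorted(ENV_TO_TAG)}")
    return f"{prompt_name}:{ENV_TO_TAG[env]}"


# Usage (needs the langsmith package and an API key):
# from langsmith import Client
# prompt = Client().pull_prompt(prompt_reference("your-prompt-name"))
```

Failing loudly on an unknown environment name is a deliberate choice here: a typo in the deployment config surfaces at startup instead of quietly pulling the wrong prompt version.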

➡️ Learn more in the official documentation: Prompt Tags

Best practice: evaluate prompt changes before promotion to production

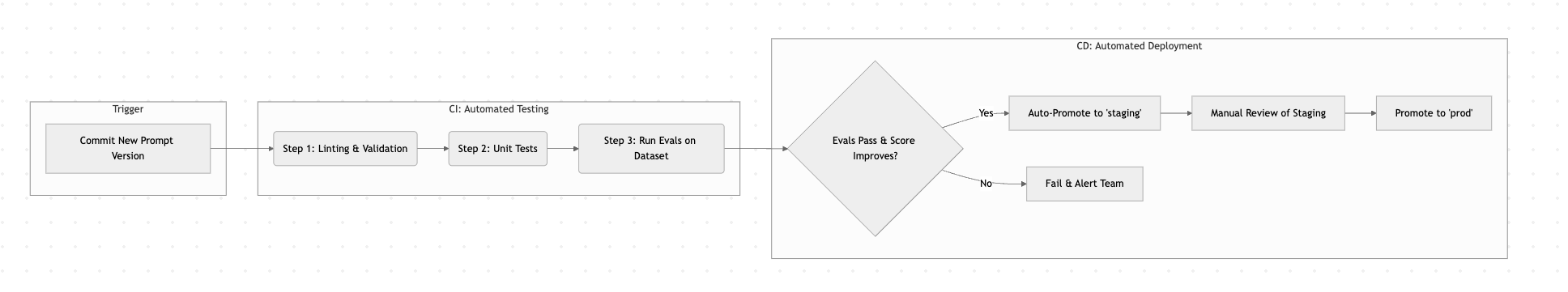

A typical pipeline consists of several automated stages that run in sequence. If any stage fails, the pipeline stops and notifies the team.

Stage 1: Trigger

The pipeline starts automatically when a new prompt version is created.

- How: This can be a `git push` to your main branch or a webhook triggered from LangSmith on every new prompt commit.

Stage 2: Linting & unit tests

This stage performs quick, low-cost checks.

- Linting: A simple script checks for basic syntax. For example, does the prompt template contain all the required input variables (e.g., `{question}`, `{context}`)?

- Unit tests: These verify the structure of the output, not the quality. You can use a framework like `pytest` to make a few calls to the new prompt and assert things like:
  - "Does the output always return valid JSON?"
  - "Does it contain the expected keys?"
  - "Is the list length correct?"
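Both Stage 2 checks can be sketched with the standard library alone. This assumes the prompt uses f-string-style `{variable}` placeholders (LangChain's default template format) and that the model is asked to return a JSON object. `REQUIRED_VARIABLES`, `lint_template`, and `check_output_structure` are hypothetical names, and the model call that produces `raw_output` is left out.

```python
from __future__ import annotations

import json
from string import Formatter

# Hypothetical: the input variables your application passes to this prompt.
REQUIRED_VARIABLES = frozenset({"question", "context"})


def lint_template(template: str, required: frozenset[str] = REQUIRED_VARIABLES) -> list[str]:
    """Return a list of problems with an f-string-style template (empty list means it passed)."""
    try:
        # Formatter().parse yields (literal_text, field_name, format_spec, conversion)
        # tuples and raises ValueError on unbalanced braces such as "{question".
        found = {field for _, field, _, _ in Formatter().parse(template) if field}
    except ValueError as exc:
        return [f"template syntax error: {exc}"]
    problems = []
    missing = sorted(required - found)
    unexpected = sorted(found - required)
    if missing:
        problems.append(f"missing variables: {missing}")
    if unexpected:
        problems.append(f"unexpected variables: {unexpected}")
    return problems


def check_output_structure(raw_output: str, expected_keys: list[str],
                           list_key: str | None = None, expected_len: int | None = None) -> dict:
    """Structural assertions on one model response; they say nothing about answer quality."""
    data = json.loads(raw_output)  # raises json.JSONDecodeError (a ValueError) if not valid JSON
    assert isinstance(data, dict), "output should be a JSON object"
    missing = sorted(set(expected_keys) - set(data))
    assert not missing, f"missing keys: {missing}"
    if list_key is not None and expected_len is not None:
        assert len(data[list_key]) == expected_len, f"expected {expected_len} items in {list_key!r}"
    return data
```

In a `pytest` test, you would call the new prompt to obtain `raw_output` (for example through a hypothetical `run_new_prompt` helper) and pass it to `check_output_structure`; pytest reports any failed `assert` inside the helper as a test failure.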

Stage 3: Quality evaluation

The new prompt version is run against your evaluation dataset to ensure it meets quality standards.

- How: This can be done in a few ways:

  - Programmatic SDK evals: For checks against known ground truths, a script can use the LangSmith SDK's `evaluate` function. This executes the new prompt against every example in your dataset, and the results are automatically scored by your chosen programmatic evaluators (e.g., for JSON validity or string matching).

  - Advanced qualitative evals with `openevals`: For more nuanced quality checks (like helpfulness, style, or adherence to complex instructions), you can use the `openevals` library. It integrates directly with `pytest` and lets you define sophisticated "LLM-as-a-judge" evaluations, in which another LLM scores the quality of your prompt's output. The LangSmith integration automatically traces and visualizes all of these evaluation runs in LangSmith, giving you a detailed view of the results.

- The check: The pipeline then compares the new prompt's aggregate evaluation scores (e.g., average correctness, helpfulness score, latency, cost) against the current production prompt's scores.
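The comparison step can be sketched as plain arithmetic over per-example scores. This assumes you have already collected one dict of metric scores per dataset example for each prompt version (extracting them from your evaluation runs is left out); the metric names and the `LOWER_IS_BETTER` set are hypothetical.

```python
from __future__ import annotations

from statistics import mean

# Hypothetical metrics where lower is better; every other metric is treated as higher-is-better.
LOWER_IS_BETTER = {"latency", "cost"}


def aggregate(results: list[dict[str, float]]) -> dict[str, float]:
    """Average each metric over the examples that report it."""
    metrics = {m for r in results for m in r}
    return {m: mean(r[m] for r in results if m in r) for m in sorted(metrics)}


def compare(candidate: dict[str, float], production: dict[str, float]) -> dict[str, dict]:
    """For each shared metric, report both averages, the delta, and whether the candidate improved."""
    report = {}
    for m in sorted(candidate.keys() & production.keys()):
        delta = candidate[m] - production[m]
        improved = delta < 0 if m in LOWER_IS_BETTER else delta > 0
        report[m] = {"candidate": candidate[m], "production": production[m],
                     "delta": delta, "improved": improved}
    return report
```

Keeping the per-metric direction explicit matters because a rising latency or cost is a regression even though its delta is positive.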

Stage 4: Continuous deployment (promotion)

Based on the evaluation results, the prompt is automatically promoted.

- Pass/fail logic: The pipeline checks whether the new prompt is "better" than the current one based on criteria such as a higher correctness score, no drop in helpfulness, and staying within the cost budget.

- Promotion to `staging`: If it passes, a script uses the LangSmith SDK to move the `staging` tag to this new commit hash. Your staging application, which pulls the `your-prompt:staging` tag, will automatically start using the new prompt.

- Promotion to `prod`: This is often a manual step: a team member can move the `prod` tag in the LangSmith UI after reviewing performance in staging. However, if your evaluation pipeline is trustworthy and consistently reflects real-world performance, this step can be automated (e.g., canary deployments with rollback monitoring), and the `prod` tag can be advanced programmatically using the LangSmith SDK.
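The pass/fail logic and the `staging` promotion can be sketched as a small gate function. The metric names and thresholds are hypothetical, and `move_tag` is a hypothetical callable standing in for whatever LangSmith SDK or API call your pipeline uses to re-point a commit tag; it is passed in so the gate can be tested without network access.

```python
from __future__ import annotations

from typing import Callable, Mapping

# Hypothetical thresholds; set them from your own metrics and budget.
MAX_AVG_COST_USD = 0.01       # average cost per example
HELPFULNESS_TOLERANCE = 0.0   # allowed drop in helpfulness (0.0 means no drop)


def passes_gate(candidate: Mapping[str, float],
                production: Mapping[str, float]) -> tuple[bool, list[str]]:
    """Higher correctness, no drop in helpfulness, and within the cost budget."""
    reasons = []
    if candidate["correctness"] <= production["correctness"]:
        reasons.append("correctness did not improve")
    if candidate["helpfulness"] < production["helpfulness"] - HELPFULNESS_TOLERANCE:
        reasons.append("helpfulness dropped")
    if candidate["cost"] > MAX_AVG_COST_USD:
        reasons.append("over cost budget")
    return (not reasons, reasons)


def promote_if_better(prompt_name: str, commit_hash: str,
                      candidate: Mapping[str, float], production: Mapping[str, float],
                      move_tag: Callable[[str, str, str], None]) -> bool:
    """Point the `staging` tag at commit_hash only when the candidate passes the gate."""
    ok, reasons = passes_gate(candidate, production)
    if ok:
        # move_tag(prompt_name, tag, commit_hash) is a hypothetical wrapper around
        # the LangSmith SDK or API call that re-points a commit tag.
        move_tag(prompt_name, "staging", commit_hash)
    else:
        print(f"Not promoting {prompt_name} at {commit_hash}: {', '.join(reasons)}")
    return ok
```

Returning the list of failure reasons, rather than a bare boolean, gives the pipeline something concrete to include in the notification it sends when a stage fails.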

Sync prompts in production

Synchronizing your LangSmith prompts with an external source code repository gives you a full commit history alongside your application code, which improves version control, collaboration, and integration with your existing CI/CD pipelines.

Best practice: use webhooks for synchronization

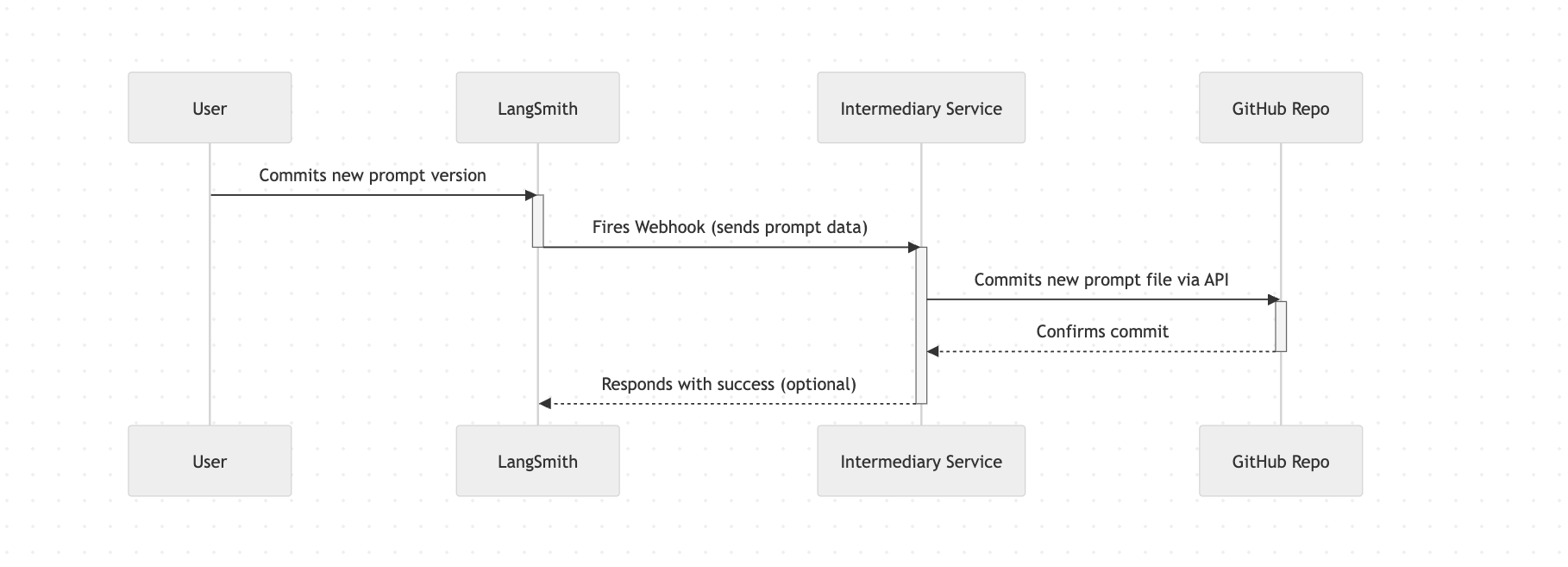

The most effective way to automate this is by using webhooks. You can configure LangSmith to send a notification to a service every time a new version of a prompt is saved. This creates a seamless bridge between the user-friendly prompt editing environment in LangSmith and your version control system.

Webhook synchronization flow

This diagram shows the sequence of events when a prompt is updated in LangSmith and automatically synced to a GitHub repository.

How to implement this with LangSmith

LangSmith allows you to configure a webhook for your workspace that will fire on every prompt commit. You can point this webhook to your own service (like an AWS Lambda function or a small server) to handle the synchronization logic.
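The service side of this flow can be sketched as two functions: one writes the committed prompt into a local git checkout, the other commits and pushes it. This assumes the webhook body is JSON with hypothetical `prompt_name`, `commit_hash`, and `manifest` fields (check the webhook documentation for the actual payload) and that the service has git installed with push credentials configured. The HTTP layer, such as an AWS Lambda handler, is left out: it would parse the request body and call these functions.

```python
from __future__ import annotations

import json
import subprocess
from pathlib import Path


def write_prompt_file(payload: dict, repo_dir: Path) -> Path:
    """Write one committed prompt to prompts/<prompt_name>.json in a local git checkout.

    The payload fields used here ("prompt_name", "commit_hash", "manifest") are
    assumptions; check the LangSmith webhook documentation for the actual schema.
    """
    name = payload["prompt_name"]
    # Refuse names that could write outside the prompts/ directory.
    if not name or "/" in name or "\\" in name or name.startswith("."):
        raise ValueError(f"unsafe prompt name: {name!r}")
    target = repo_dir / "prompts" / f"{name}.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    record = {"commit_hash": payload["commit_hash"], "manifest": payload["manifest"]}
    # Stable formatting keeps git diffs limited to real prompt changes.
    target.write_text(json.dumps(record, indent=2, sort_keys=True) + "\n", encoding="utf-8")
    return target


def commit_and_push(repo_dir: Path, path: Path, commit_hash: str) -> bool:
    """Commit and push the prompt file; return False if nothing changed."""
    git = ["git", "-C", str(repo_dir)]
    subprocess.run([*git, "add", str(path.relative_to(repo_dir))], check=True)
    # `git diff --cached --quiet` exits 0 when nothing is staged.
    if subprocess.run([*git, "diff", "--cached", "--quiet"]).returncode == 0:
        return False
    subprocess.run([*git, "commit", "-m", f"Sync prompt from LangSmith commit {commit_hash}"], check=True)
    subprocess.run([*git, "push"], check=True)
    return True
```

Recording the LangSmith commit hash in both the file and the git commit message lets you trace any version in your repository back to the exact prompt commit in LangSmith.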

➡️ Learn more in the official documentation: Trigger a webhook on prompt commit, How to Sync Prompts with GitHub

Use a prompt in production without repeated API calls

Best practice: cache prompts in your application

To avoid putting an API call to LangSmith in the "hot path" of your application, you should implement a caching strategy. The prompt doesn't change on every request, so you can fetch it once and reuse it. Caching not only improves performance but also increases resilience, as your application can continue to function using the last-known prompt even if it temporarily can't reach the LangSmith API.

Caching strategies

There are two primary strategies for caching prompts, each with its own trade-offs.

1. Local in-memory caching

This is the simplest caching method. The prompt is fetched from LangSmith and stored directly in the memory of your application instance.

- How it works: On application startup, or on the first request for a prompt, fetch it from LangSmith and store it in a global variable or a simple cache object. Set a Time-To-Live (TTL) on the cached item (e.g., 5-10 minutes). Subsequent requests use the in-memory version until the TTL expires, at which point it's fetched again.

- Pros: Extremely fast access with sub-millisecond latency; no additional infrastructure required.

- Cons: The cache is lost if the application restarts. Each instance of your application (if you have more than one server) will have its own separate cache, which could lead to brief inconsistencies when a prompt is updated.

- Best for: Single-instance applications, development environments, or applications where strict consistency across all nodes is not critical.
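A minimal sketch of local in-memory caching with a TTL, assuming `fetch` is something like `client.pull_prompt` (any callable that takes a prompt identifier works, which also makes the cache easy to test). `PromptCache` is a hypothetical helper, not part of the LangSmith SDK. It also covers the resilience point above: if a refresh fails, it keeps serving the last-known prompt and waits another TTL before retrying.

```python
from __future__ import annotations

import threading
import time
from typing import Any, Callable


class PromptCache:
    """Per-process TTL cache for prompts, with a last-known-good fallback.

    Hypothetical helper: `fetch` is any callable taking a prompt identifier,
    for example `client.pull_prompt` from the LangSmith SDK.
    """

    def __init__(self, fetch: Callable[[str], Any], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._entries: dict[str, tuple[Any, float]] = {}  # identifier -> (prompt, fetched_at)
        self._lock = threading.Lock()

    def get(self, identifier: str) -> Any:
        # One lock per process: concurrent requests wait for a single refresh
        # instead of all calling LangSmith at once when the entry expires.
        with self._lock:
            entry = self._entries.get(identifier)
            if entry is not None and self._clock() - entry[1] < self._ttl:
                return entry[0]  # fresh cache hit, no API call
            try:
                prompt = self._fetch(identifier)
            except Exception:
                if entry is None:
                    raise  # nothing cached yet, so the error must surface
                # Keep serving the last-known prompt and retry after another TTL.
                self._entries[identifier] = (entry[0], self._clock())
                return entry[0]
            self._entries[identifier] = (prompt, self._clock())
            return prompt
```

For example, `PromptCache(Client().pull_prompt, ttl_seconds=600).get("your-prompt-name:prod")` would fetch on the first request and then serve the prompt from memory for ten minutes.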

2. Distributed caching

This approach uses an external, centralized caching service that is shared by all instances of your application.

- How it works: Your application instances connect to a shared caching service like Redis or Memcached. When a prompt is needed, the application first checks the distributed cache. If it's not there (a "cache miss"), it fetches the prompt from LangSmith, stores it in the cache, and then uses it.

- Pros: The cache is persistent and is not lost on application restarts. All application instances share the same cache, ensuring consistency. Highly scalable for large, distributed systems.

- Cons: Requires running and maintaining additional infrastructure, and each cache lookup adds a network round-trip, so it is slower than local in-memory access.

- Best for: Scalable, multi-instance production applications where consistency and resilience are top priorities. Using a service like Redis is the industry-standard approach for robust application caching.
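A minimal cache-aside sketch of this flow. It assumes the cached prompt can be represented as a JSON-serializable dict (for example, the template text and model settings rather than a live prompt object); `get_prompt`, the `prompt:` key prefix, and the `fetch` callable are hypothetical. The `store` argument only needs the `get` and `set(..., ex=...)` methods that the redis-py client provides, which also lets it be swapped for an in-memory fake in tests.

```python
from __future__ import annotations

import json
from typing import Any, Callable, Optional, Protocol


class KeyValueStore(Protocol):
    """The two redis-py client methods this sketch relies on."""

    def get(self, name: str) -> Optional[bytes]: ...

    def set(self, name: str, value: str, ex: Optional[int] = None) -> Any: ...


def get_prompt(store: KeyValueStore, identifier: str,
               fetch: Callable[[str], dict], ttl_seconds: int = 300) -> dict:
    """Cache-aside lookup shared by every application instance."""
    key = f"prompt:{identifier}"  # hypothetical key naming scheme
    cached = store.get(key)
    if cached is not None:
        return json.loads(cached)  # hit: json.loads accepts bytes or str, no call to LangSmith
    prompt = fetch(identifier)  # miss: fetch from LangSmith (assumed callable)
    # The TTL bounds how long instances keep using a prompt after its tag moves.
    store.set(key, json.dumps(prompt), ex=ttl_seconds)
    return prompt
```

In production, `store` would typically be a client such as `redis.Redis(host="localhost", port=6379)`. Because entries expire after `ttl_seconds`, a prompt whose tag has moved is picked up at most that long afterwards, unless you also delete the key when a new prompt commit arrives.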